AI Has a Credibility Problem. Here’s the Fix.

Good morning,

This week we’re looking at AI’s perception gap, platform moves, and why marketing teams are rethinking what success looks like.

First up: AI may be advancing fast, but trust isn’t keeping up. In Big Picture, we dig into how over-promising and under-delivering have created a credibility drag - and share a framework to help the industry rebuild trust around AI.

Also in this issue:

Salesforce sees signs of life in enterprise AI spend

GPT-5.2 drops this week amid growing pressure from Gemini

Google’s AI glasses aim for 2026, while OpenAI's financial model faces scrutiny

And new data shows GenAI ads now beat human-made ads by 19% on click-throughs

In What Works, we look at how top marketers go beyond cost-cutting and start productizing AI to drive scale and revenue.

Plenty here for teams trying to turn AI from headline to impact.

- The Marketing Embeddings Team


NEWS

Salesforce Inc. gave a stronger‑than‑expected revenue forecast for the current quarter, signalling that customers are beginning to spend more on its Agentforce artificial intelligence tools even as investors remain wary about the long‑term impact of AI on legacy software makers: Read More

GenAI-created ads see 19% higher click-through rates than human-created ads: Read More

The ChatGPT creator will unveil GPT-5.2 this week, The Verge reported, after OpenAI CEO Sam Altman declared a “code red” situation following the launch of Google Gemini 3 last month: Read More

The stock market has been showing signs of favoring Google over AI competitors such as OpenAI: Read More

Google on Monday said it plans to launch the first of its AI-powered glasses in 2026, as the tech company ramps up its efforts to compete against Meta in a heating consumer market for AI devices: Read More

BIG PICTURE

AI Has a Trust Problem, Not a Capability Problem

AI adoption isn’t slowing because the technology doesn’t work. It’s slowing because many people don’t trust it - and trust, more than access or awareness, has become the primary barrier to use.

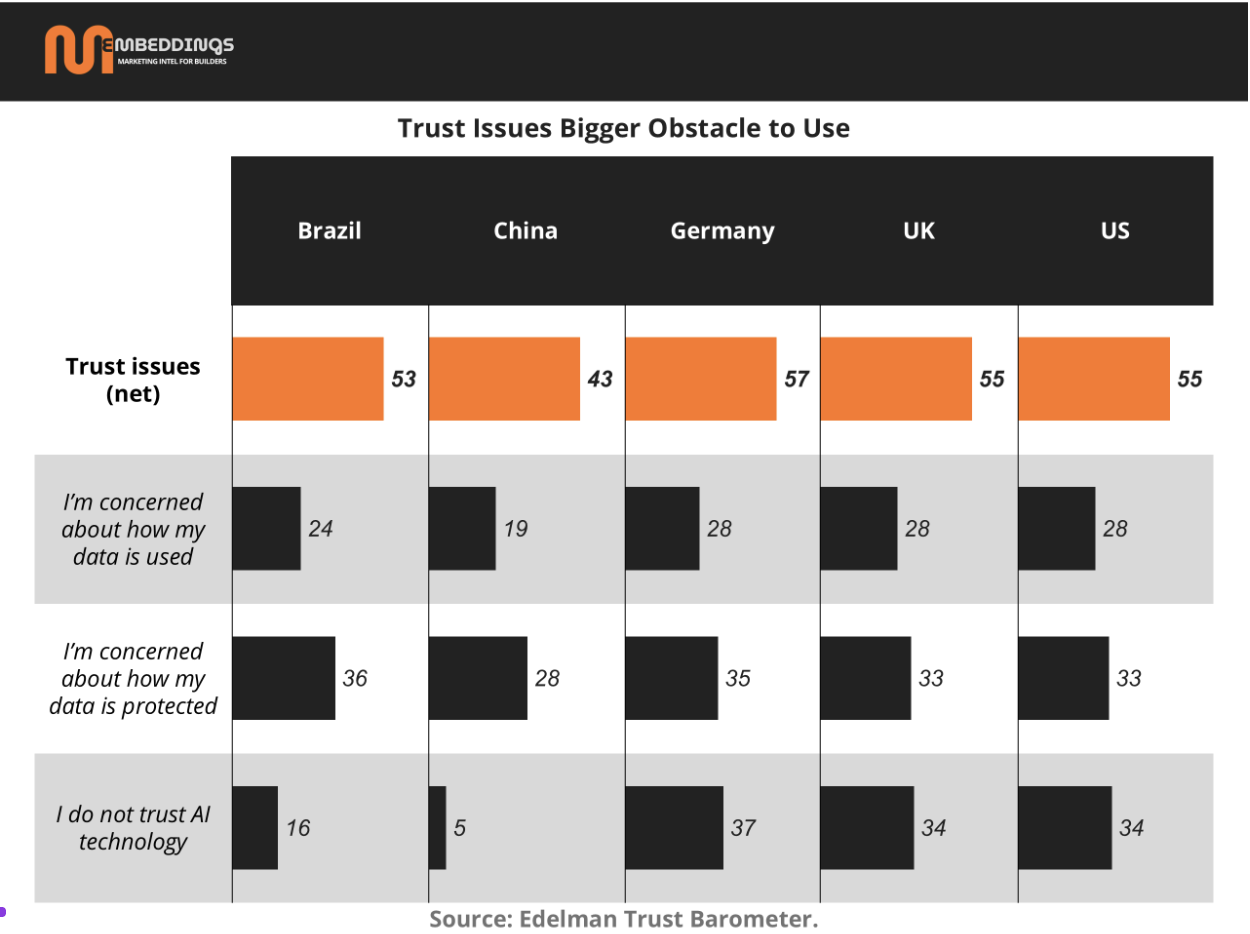

Across Edelman’s 2025 AI flash poll markets, people in countries like Germany, the UK, and the U.S. are more likely to say they reject the growing use of AI than embrace it. Trust in the technology itself lags well behind trust in the tech sector more broadly. Among people who use AI less than once a month, “trust issues” - concerns about data use, privacy, and whether the systems are reliable or honest - outweigh lack of interest, lack of access, or feeling intimidated by the tools. Around half of respondents say they worry more about being left behind by AI than benefiting from it, with that anxiety particularly strong among lower-income groups and in many advanced economies.

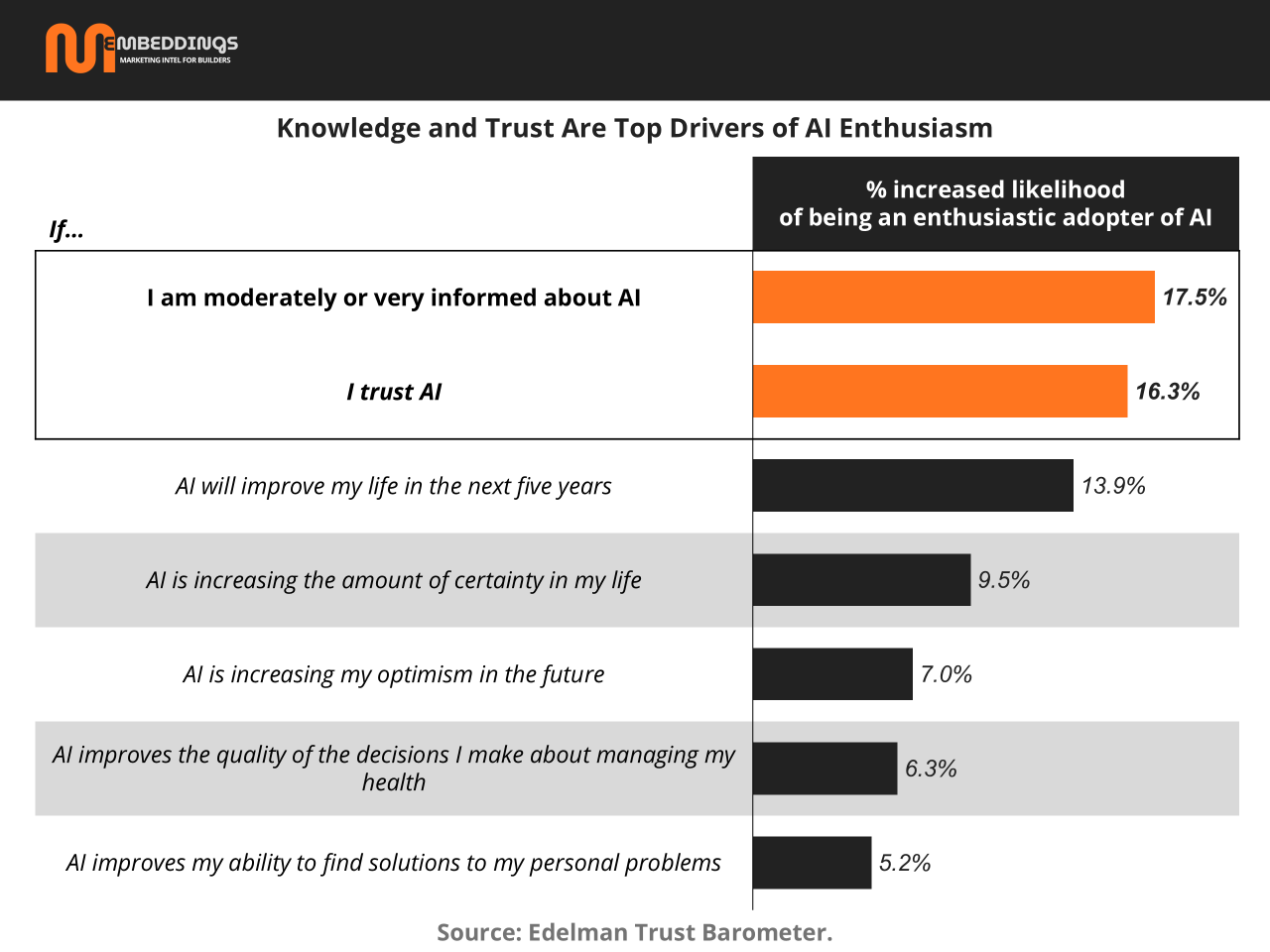

Edelman’s regression analysis makes the point explicit: being moderately or very informed about AI and trusting AI are the two strongest predictors of whether someone becomes an enthusiastic adopter, ahead of any single promise about productivity, creativity, or future economic gains. Crucially, outright bad personal experiences with AI remain relatively rare, even among people who say they reject it. That suggests adoption is being held back less by product failure than by perception, narratives, and concerns about power and fairness. This is not just a technology problem; it’s a trust and communication problem.

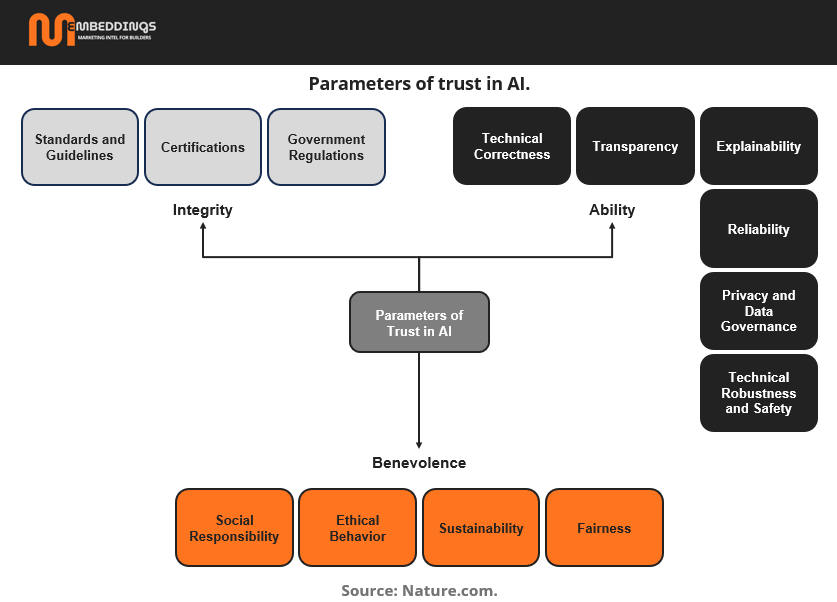

That distinction is clarified by the Nature review on trust in AI, which synthesizes decades of research and shows that people tend to evaluate AI systems along three classic trust dimensions: ability, integrity, and benevolence.

Ability asks whether AI systems are accurate, reliable, and fit for purpose in real-world conditions.

Integrity concerns transparency, explainability, consistency with rules and norms, and whether limitations and risks are honestly disclosed.

Benevolence reflects whether the system is perceived as acting in users’ interests — protecting data, avoiding hidden harms, and sharing benefits fairly rather than concentrating them among a few powerful actors.

Figure 3 in the Nature paper maps common trust factors — including accuracy, robustness, explainability, data governance, and fairness — into these three buckets for human–AI interactions. The framework helps explain why improvements in benchmark performance alone have not translated into public confidence. Ability matters, but it is only one-third of the trust equation.

Seen through this lens, AI’s “PR problem” becomes more legible. Confidently delivered but incorrect answers undermine perceived ability. Opaque systems, unclear data practices, and vague assurances weaken integrity. And widespread fears about job displacement, unequal access, and extractive data use erode benevolence, even when systems perform well technically.

The implication is straightforward: trust cannot be rebuilt through bigger claims or faster rollout. It requires verifiable performance, clear governance and disclosures, and a credible case that ordinary people, not just large firms or power users, benefit. Edelman shows us how deep the trust gap is; the Nature framework clarifies what it will actually take to close it. Until AI is experienced as capable, honest, and aligned with users’ interests, trust, not technology, will remain the binding constraint on adoption.

WHAT WORKS

AI pays off when it shifts marketing from efficiency to effectiveness

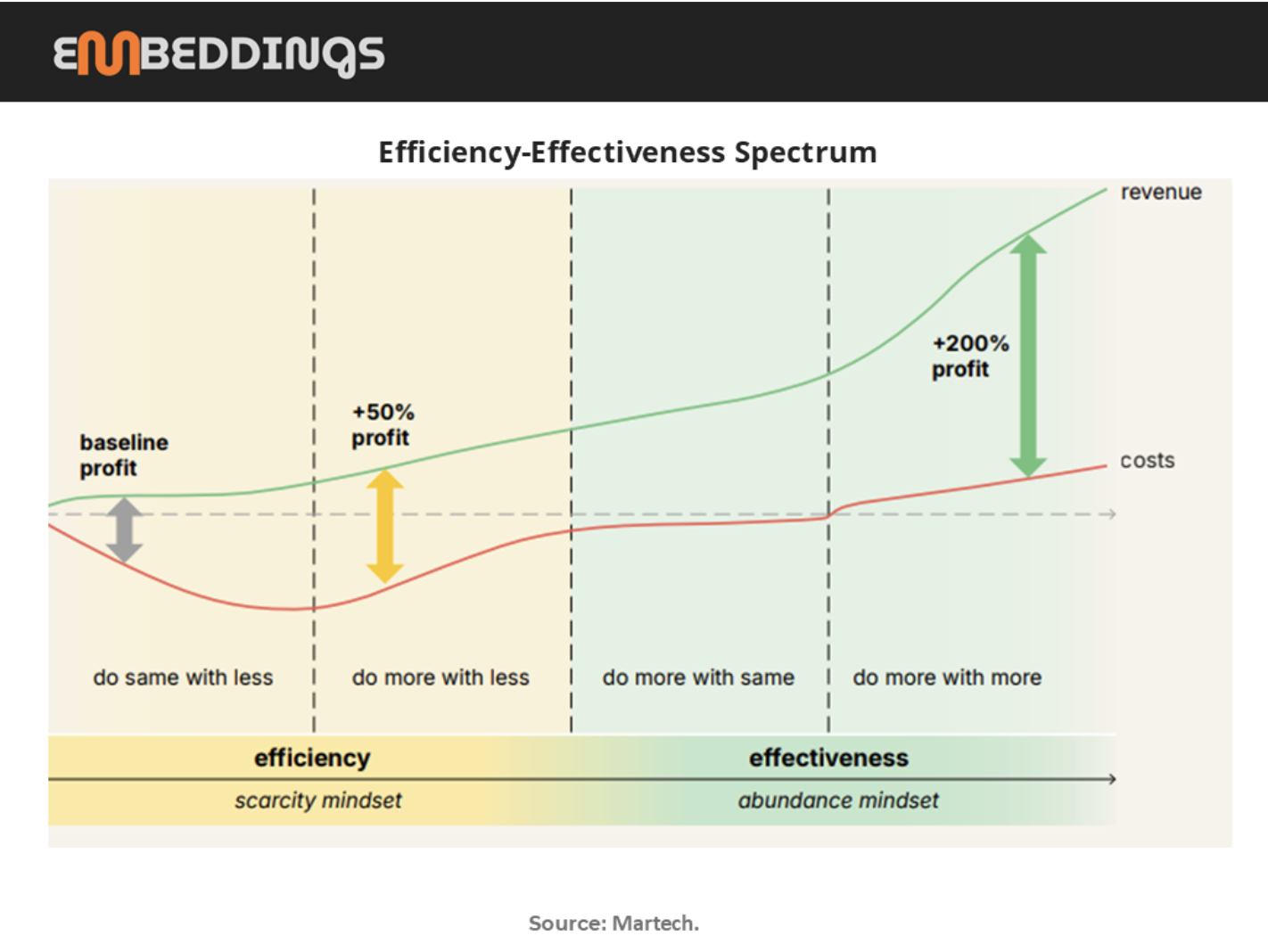

Most teams start by using AI to do the same with less, automating tasks like content variants, reporting, or basic targeting. This drives cost savings, but the impact is limited.

The biggest wins come when companies move toward doing more, using AI to expand creative output, personalize at scale, and adapt campaigns faster than human teams alone. In this mode, AI doesn’t just reduce costs; it increases revenue. Even with higher spend, the profit gap widens dramatically. The lesson: AI creates outsized value when it’s used for growth, not just efficiency.

High performers productize AI instead of relying on one-off experiments

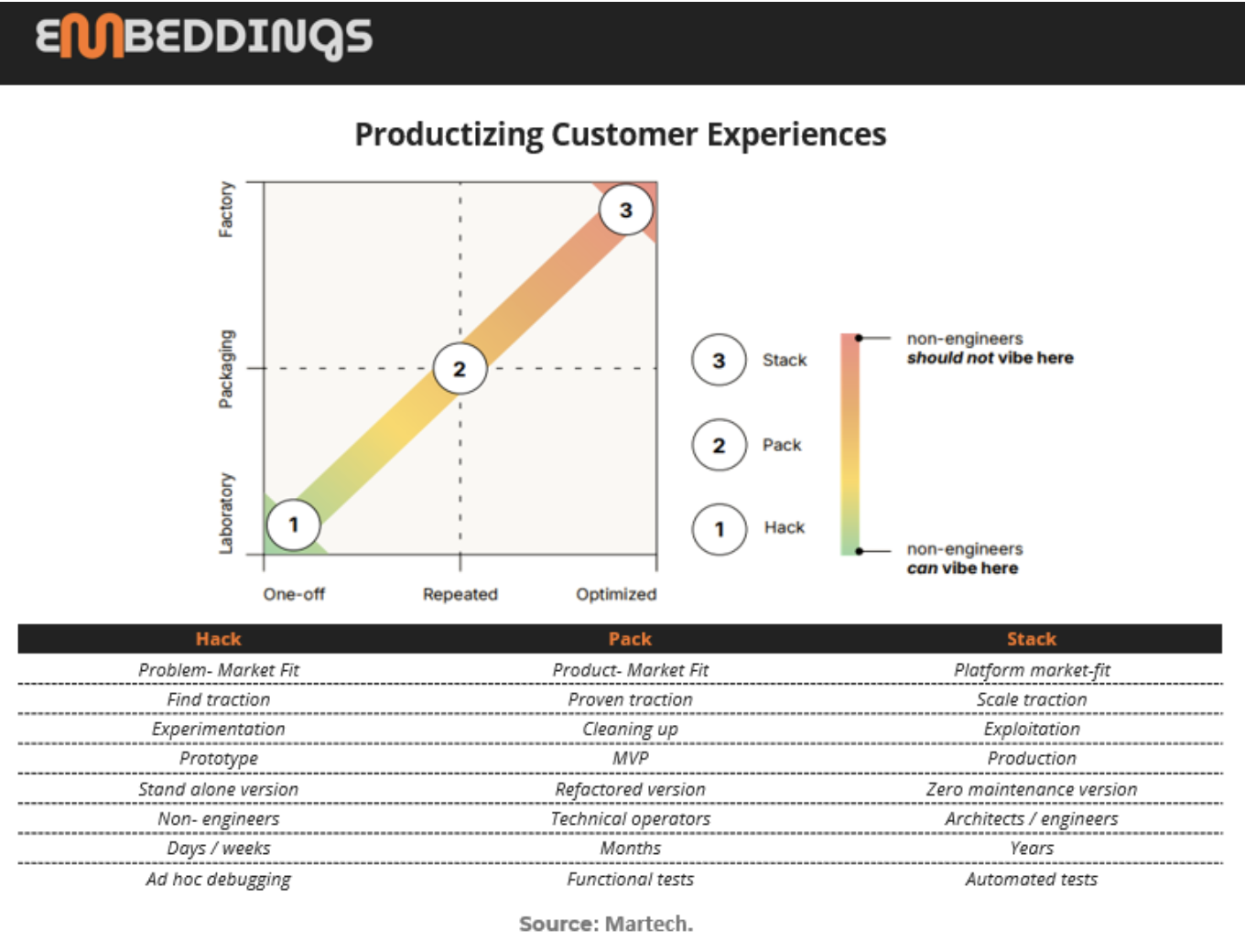

Successful teams don’t stop at AI “hacks.” They start with fast experiments, turn proven ideas into repeatable workflows, and eventually embed AI into scalable systems.

This progression, from hack to packaged solution to fully stacked product, is what turns AI from a novelty into a durable advantage. The strongest companies know when to move beyond experimentation and build AI directly into their marketing stack.

Bottom line: AI works best when it enables more ambition and is operationalized into systems that scale.