The Trust Gap Is Becoming AI’s Biggest Risk

Good morning FIRSTNAME,

This week, AI becomes unavoidable: CES embeds it in every device, Musk says we have already entered the singularity, and the questions are getting harder to ignore.

In News, Instagram’s Adam Mosseri warns we may soon lose the ability to distinguish real from fake online. Meta finalizes its multi-billion-dollar acquisition of Manus, signaling that AI agents are moving from the lab to the core. Amazon expands Alexa+ to the web, taking aim at browser-based assistants, and CES 2026 puts AI chips for data centers, PCs, and autonomous tech at center stage.

In The Trust Issue, we unpack a looming question: what happens when we enter the singularity without entering consensus? Musk may call it a milestone, but for most of the public, it’s a warning. Until safety, governance, and public confidence catch up, the AI future remains fragile.

Finally, in Big Picture, we track a critical divergence: corporate confidence in AI is rising fast, but public trust is lagging behind. New research from Just Capital and the Future of Life Institute shows that while leaders anticipate massive upside, the public sees personal risk—and most AI companies aren’t ready to handle the consequences.

- The Marketing Embeddings Team

NEWS

Instagram head Adam Mosseri warns that we are approaching a point where we will no longer be able to tell what is real and what is not in our digital world. (Read More)

Meta finalized its acquisition of AI agent startup Manus, with market estimates placing the deal between one and five billion dollars. Since launch, Manus has processed roughly 147 trillion tokens and created more than 80 million virtual machines. The move signals that agents are no longer an experiment; they are being pulled into the core. (Read More)

Amazon launches Alexa+ on the web, expanding its AI assistant beyond Echo devices and positioning it more directly against browser-based AI tools like ChatGPT. (Read More)

AI and advanced silicon are front and center at CES 2026: Nvidia, AMD, and Intel highlighted new AI platforms and processors targeting everything from data center workloads to personal computing and autonomous tech. (Read More)

THE TRUST ISSUE

When We Enter the Singularity Without Entering Consensus

Elon Musk declared last week that we've entered the singularity—not approaching it, but already in it. The claim itself matters less than what it signals: a growing consensus among AI leaders that we've crossed into a fundamentally different phase where progress compounds faster than institutions, regulations, or public understanding can track. The singularity, as a concept, describes the point where normal planning horizons break down because change itself is accelerating exponentially.

But here's the uncomfortable question: what happens when we enter the singularity without entering consensus on safety, control, or trust?

Two major research releases this month expose the fault lines. Just Capital's Trust Gap study and the Future of Life Institute's AI Safety Index reveal a system accelerating toward transformation while alignment on three fronts (capability and safety, optimism and preparedness, leadership and society) remains critically incomplete.

AI adoption is outpacing both public consensus and safety infrastructure. Read the sentiment data alongside the independent safety evaluations and a clear pattern emerges: confidence at the top, caution everywhere else, and a widening gap between optimism, trust, and objective preparedness. That gap is the core tension shaping AI’s next phase.

BIG PICTURE

1. The Trust Gap: Optimism Without Alignment

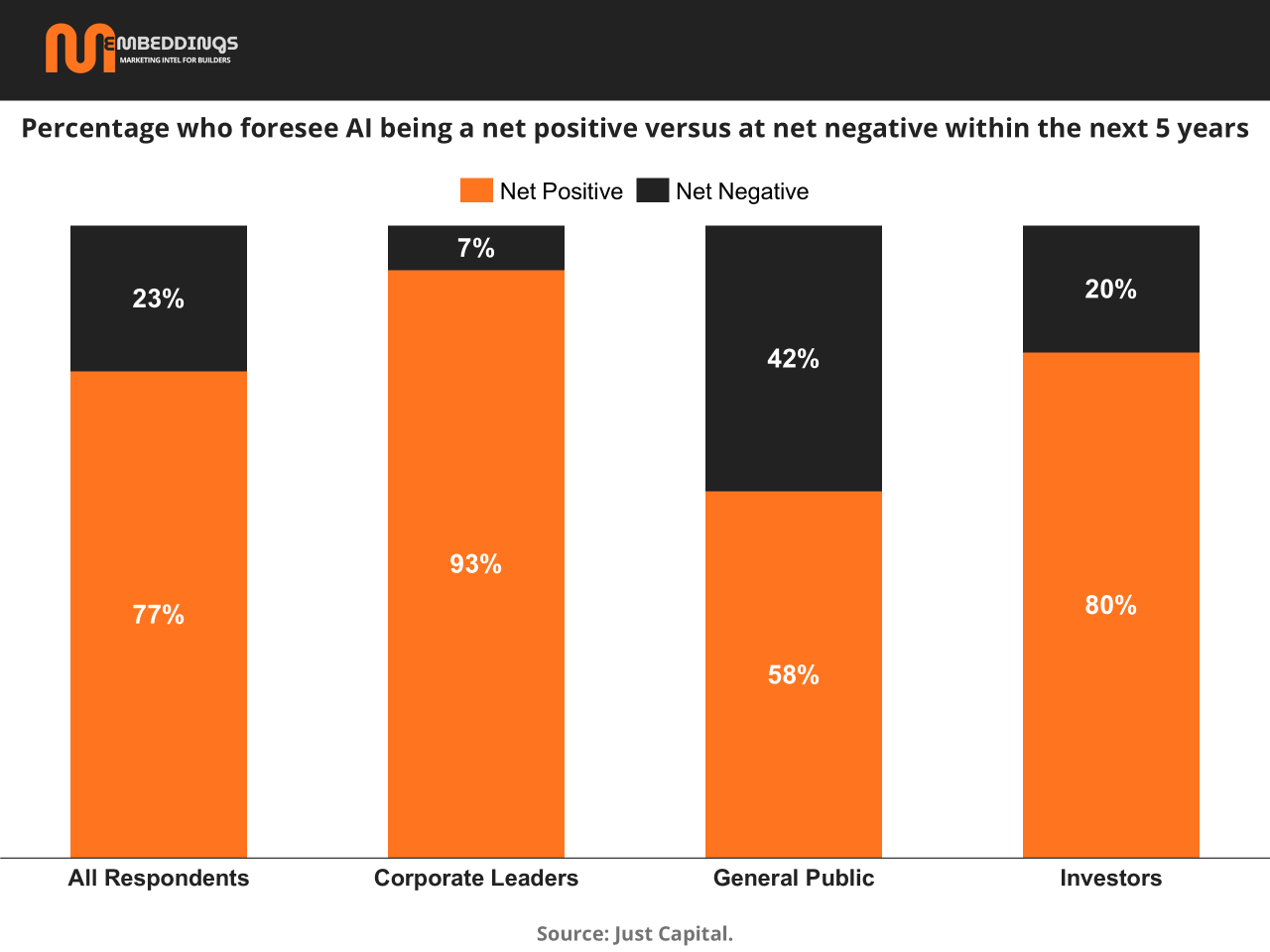

Corporate leaders and investors are overwhelmingly confident that AI will be a net positive over the next five years—93% of executives and 80% of investors hold this view. The general public, however, is far less convinced, with only 58% expecting a positive outcome. (More)

This divergence is not simply philosophical; it reflects fundamentally different lived experiences and risk perceptions. While leadership views AI primarily through the lens of growth, productivity, and competitiveness, the public weighs it against job security, social stability, environmental cost, and loss of control.

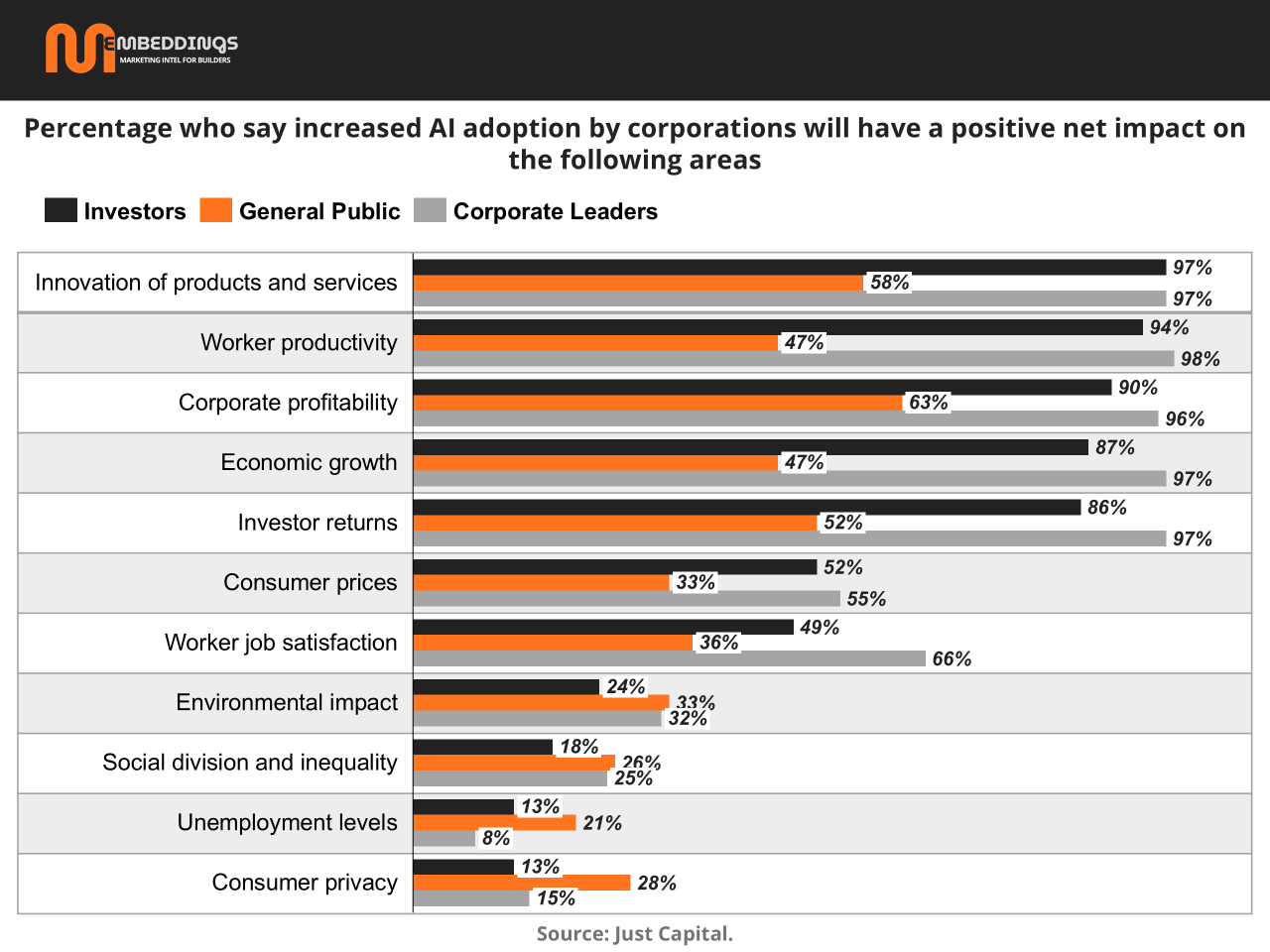

That difference becomes sharper when examining where AI is expected to deliver benefits. Executives and investors anticipate strong positive impact on innovation, productivity, profitability, and economic growth, often at levels above 90%. The public, by contrast, remains skeptical across nearly every category, especially worker productivity, job satisfaction, environmental impact, inequality, and privacy.

This is the trust gap in concrete terms:

Leaders expect upside to materialize systemically

The public expects risk to materialize personally

Notably, all groups agree AI transformation is imminent—measured in months and years, not decades. This compresses the window for trust-building. As Just Capital’s research highlights, trust is now contingent on visible action: safety investment, workforce transition planning, and environmental accountability. Without progress in these areas, optimism alone is insufficient to secure legitimacy.

2. The Objective Evaluation: Capability Is Outpacing Safety

Where the Trust Gap reflects perception, the AI Safety Index provides an external reality check.

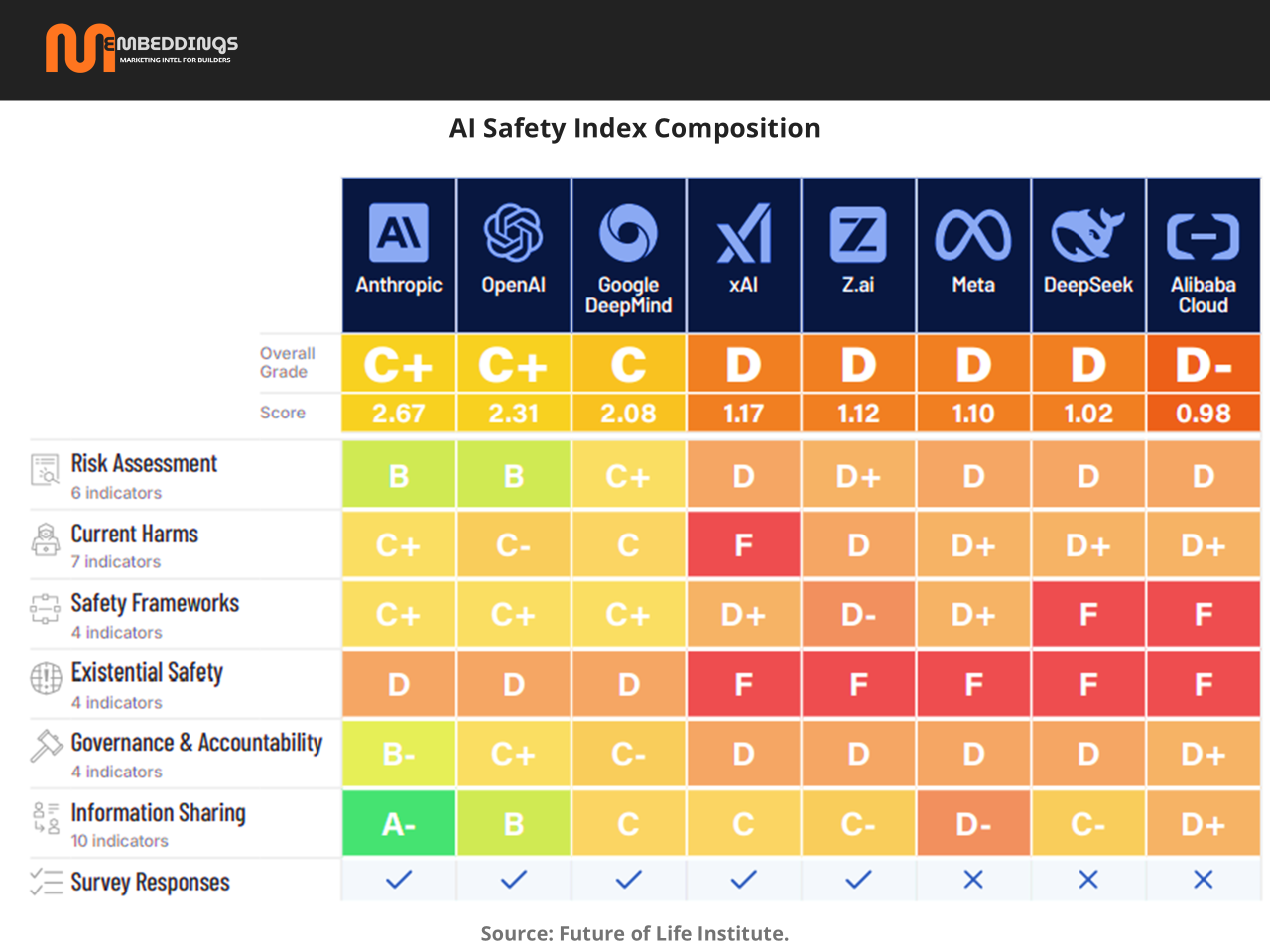

The Future of Life Institute’s AI Safety Index independently evaluated eight leading AI developers across 35 indicators spanning risk assessment, current harms, safety frameworks, governance, information sharing, and existential safety. The headline finding is stark: no company scored higher than a C+ overall.

Even the top performers—Anthropic, OpenAI, and Google DeepMind—fall well short of what the Index defines as adequate preparedness for advanced AI risks. The second tier of companies clusters in the D range, indicating substantial deficiencies across core safety domains.

The most critical failure is existential safety. For the second consecutive edition, no company scored above a D in planning for catastrophic or loss-of-control scenarios. Despite public statements acknowledging extreme risks, evaluators found no credible, measurable control strategies, thresholds, or enforcement mechanisms.

Other systemic gaps reinforce this conclusion:

Risk assessments remain narrow, weakly validated, and insufficiently independent

Safety frameworks lack enforceable triggers and real-world mitigation authority

Governance structures, including whistleblower protections and oversight, are underdeveloped

Transparency and information sharing vary widely and often fall below emerging global standards

The result is a widening structural imbalance: AI capabilities are advancing rapidly, while safety practices evolve incrementally. As the Index concludes, the sector is increasingly unprepared for the risks it is actively creating.

Why This Matters

Taken together, these findings reveal a compounding risk:

Public trust is fragile and conditional

Corporate confidence is high but unevenly grounded

Objective safety readiness is inadequate across the industry

For marketers, builders, and investors, this is not an abstract governance debate. Trust, adoption, regulation, and long-term value creation are converging around one central question: can AI leaders demonstrate not just innovation, but control?

The next phase of AI competition will not be defined solely by model capability. It will be defined by who can credibly close the gap between optimism and accountability, speed and safety, and ambition and trust.